One of the most exciting movements in learning, in my view, is personalization. In my company, we are beginning to see major projects that involve creating a personalized experience through learning portals and the interplay of communities with online academies. How does this affect the instructional design and development process? I’d like to offer you some of my own lessons learned in this new arena. But first, let’s consider two important issues that affect your personalization efforts.

First issue: big data

Creating a complete learning ecosystem where learning journeys provide relevant and focused content, while flexing to meet changing business priorities, is one of the most satisfying aspects of working in learning today. Anyone who has worked in learning and knowledge over the past decade or so has known it was just around the corner; now it is a reality. However, as the possibilities grow, so does the data. Big data changes the conversations that L&D has with the business. It opens the door to discussions at the top table: there’s so much that can be measured, sliced, diced, and reported upon.

What this can mean in practice, however, is that L&D, HR, IT, and the board can become paralyzed by the seeming complexity of what is needed. What do we do with all the data? Where does the data reside and what does it look like? On a practical level, the learning record store provides an ideal conduit between enterprise resource planning-level HR and LMS systems, customer relationship management, collaboration tools, intranets, and more, but trying to get all the stars aligned causes one tremendous headache.
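To make the question of what the data looks like a little more concrete: learning record stores commonly hold activity records in an actor-verb-object form, as described in the xAPI specification. The sketch below assumes that form; the learner, email address, and activity URL are hypothetical placeholders, and `make_statement` is an illustrative helper rather than part of any particular product.

```python
import json


def make_statement(learner_name, learner_email, verb_id, verb_label,
                   activity_id, activity_name):
    """Build one actor-verb-object activity record as a plain dict."""
    return {
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        "verb": {
            "id": verb_id,
            "display": {"en-US": verb_label},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
    }


# Hypothetical learner and activity, for illustration only.
statement = make_statement(
    "Pat Example",
    "pat@example.com",
    "http://adlnet.gov/expapi/verbs/completed",
    "completed",
    "https://example.com/activities/negotiation-basics",
    "Negotiation Basics",
)

print(json.dumps(statement, indent=2))
```

Because each source system (LMS, collaboration tool, intranet) can emit records in this one shape, the record store can sit between them without needing to know how each one models its own data.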

Second issue: competency models

Let’s look at this scenario.

You’ve been asked to deliver learning portals that provide a personalized view into “My Learning World.” That is, into what an individual needs in order to:

- Progress their career

- Deliver against their objectives

- Align to the business strategy of the organization

- Have opportunities for talent development

- Receive content that matches personal likes and excludes personal dislikes

- Feel incentivized and rewarded

And, by the way, every piece of content and each interaction must be top quality.

Let me ask you a question. Do you have a global competency model that is entirely consistent across the whole company, fully understood, and used from day-one recruitment through an individual’s entire career? Chances are you don’t and frankly won’t, at least not at a level that ensures that every time an individual logs into their learning portal, it is entirely up to date and relevant to what needs to be delivered and the learning that’s needed.

But let’s face it, although there are organizations out there with very well adopted and scoped competency models, the competency model is only a small piece of the whole story. It just happens to be a chapter in that story that is great for creating data. But the pace of corporate life today means we have to be very careful about what we commit to: the minute that model drives out-of-date content to employees, all credibility is lost. (Perhaps their HR records have not been kept entirely up to date. Outrageous, I know, but it happens! Or perhaps our business faces a major shift or opportunity to which we need to respond rapidly.) It turns out this personalized learning experience doesn’t really know employees after all, so they go back to finding the insights, advice, and tools they really need elsewhere; opportunity and time are lost.

Finding the path

So I prefer to start projects differently. Embedding evaluation into the ecosystem design and the implementation of that design is essential. A great way to do this with true insight and depth is to work intensely with some well-chosen core business areas in an organization, rather than aiming for instant adoption by the entire enterprise. Let me explain how that works.

I spoke on a webinar recently about my preferred launch method, viral adoption. It avoids the tiresome “next big thing,” instead driving buy-in that is built on a solid, tangible foundation. It’s easier for learning strategists, designers, and facilitators to build content that meets real needs, and it reduces resistance to change from employees and management alike.

Viral adoption means we can launch with some great core content, facilitate great conversations, support blossoming communities, and get those stories from the front line where the learning is having real impact. Learning paths create the scaffold, meaning that as learners complete formal learning, engage in workplace activities, access performance support content, and participate in conversations, the learning portal really gets to know each individual. The portal can evolve and grow based on real activity, on what really matters.

Another typical scenario is where a time-bound business imperative requires rapid upskilling and collaborative problem solving between colleagues to uncover new insights. This presents a real need with real business measures attached, providing a clear method for measuring the success of the interventions. Engagement with formal learning experiences, with the conversations created, and with shared resources, together with measures of community health, provides insights that help map how successful the learning content and facilitation have been in delivering key business goals. Again, a viral approach succeeds because these learning programs emerge as needed, and their significance to the business helps drive adoption while providing great data for use by management.

The result is that the data we can get is truly valuable, truly insightful. We may start with page impressions, content ranking and recommendations, numbers of posts, etc., but this really only tells us if we got the design right and our internal communications campaign worked.

What we want is to understand how our learning ecosystem is transforming our organization and delivering on our strategy. For this, we have to look beyond typical measures. What often happens when online learning is at the heart of the solution is that we look to visitor numbers, posts on discussions, cost saved, scores on knowledge checks, and amount of content consumed. This is understandable, as we want to see if what we have created is actually being used. But ROI isn’t about how often learners visit the site, how many points they’ve earned on the leaderboard, or how much stuff they have posted.

Value chain analysis keeps interventions on target

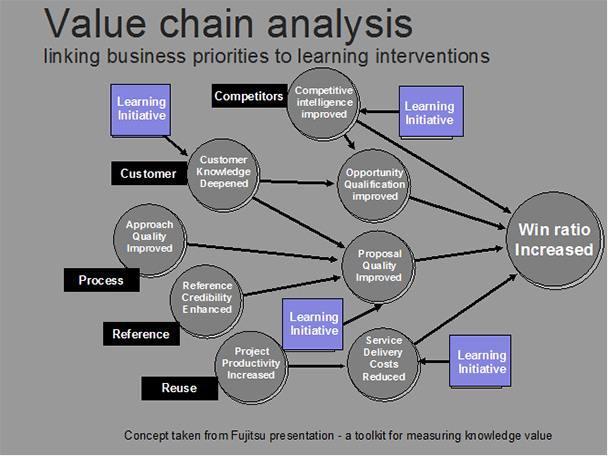

What matters is how impactful this behavior is on day-to-day working life and the lives of colleagues in delivering their objectives. It means the way we evaluate learning interventions needs to start with the change we are trying to instill and with ensuring that the learning ecosystem delivers exactly the business results intended. A popular model we use with our customers is value-chain analysis, which ensures that the learning interventions deployed at each stage of the ecosystem are correctly targeted at the company objectives.

This illustration of value chain analysis, borrowed from Fujitsu, uses the sales team objective of increasing the win ratio (Figure 1). Each intervention will be aiming for a specific outcome, for which there will be assumptions. Interventions must be measured against the intended outcome. The better the match between assumptions and actual conditions, and between intended outcomes and actual results, the more effective the intervention.

Figure 1: Value chain analysis
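One way to picture the two comparisons described above (assumptions against actual conditions, intended outcomes against actual results) is a small record per intervention. This is only a sketch under stated assumptions: the `Intervention` class, the two ratios, and the sample figures are invented for illustration and are not taken from the Fujitsu model or from any real project.

```python
from dataclasses import dataclass, field


@dataclass
class Intervention:
    """One learning intervention in the value chain (illustrative structure)."""
    name: str
    intended_outcome: str
    target: float          # intended result, in the outcome's own unit
    actual: float          # measured result, in the same unit
    assumptions: dict = field(default_factory=dict)  # assumption -> did it hold?

    def outcome_attainment(self) -> float:
        """Actual result as a fraction of the intended result, capped at 1.0."""
        if self.target <= 0:
            raise ValueError("target must be positive")
        return min(self.actual / self.target, 1.0)

    def assumptions_held(self) -> float:
        """Share of the stated assumptions that matched actual conditions."""
        if not self.assumptions:
            return 1.0
        return sum(self.assumptions.values()) / len(self.assumptions)


# Hypothetical example values, made up for illustration only.
workshop = Intervention(
    name="Proposal-writing workshop",
    intended_outcome="Proposals submitted on time (%)",
    target=80.0,
    actual=60.0,
    assumptions={
        "Sales staff have time to attend": True,
        "Managers coach afterwards": False,
    },
)

print(f"{workshop.name}: outcome attainment {workshop.outcome_attainment():.2f}, "
      f"assumptions held {workshop.assumptions_held():.2f}")
```

Keeping the assumptions next to the outcome makes it easier to tell whether a shortfall came from the intervention itself or from conditions that turned out differently than expected.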

This is a somewhat different approach compared to the traditional Kirkpatrick model, which is still needed in the right places, but value-chain analysis does a great job at getting to what truly matters.

Using this approach not only enables us to build learning portals that provide the right combination of performance support, collaborative learning, and career management but also ensures that we can map the quality of each of the resources at each level against real business outcomes.