Convert Effective Instructor-led Training to Captivating eLearning

The Institute for Creative Technologies at the University of Southern California (USC-ICT) is a US Army university-affiliated research center. This center conducts research and development for training across multiple domains including simulations, games, artificial intelligence models, virtual and mixed realities, and more. This article is a case study of learning technology work my organization is doing for USC-ICT.

Among USC’s many programs is one titled Captivating Virtual Instruction for Training (CVIT). A portion of the stated goals of CVIT puts it this way: “CVIT is a multi-year research effort seeking to produce blueprints for mapping effective instructional techniques used in successful live classroom settings to core enabling technologies, which may then be used for the design and development of engaging virtual and distributed learning applications.”

The challenge of creating captivating distance learning

Let me unpack that statement. There is a purposeful focus within CVIT around the word “captivating.” The user experience (UX), the learning experience: Is it captivating? The USC learning and behavior scientists are looking to take the concepts that make popular and effective live training “captivating,” and keep that training captivating while digitizing and blending it into distance learning. There is tremendous value in training content that holds your attention and keeps you engaged. This particular content has been designed with learning science, is considered documented training materials or platform instruction, and is deemed to be effective at meeting a standard of performance when delivered by the instructor. Considering that instructor-led training, or ILT, has often been referred to as “death by PowerPoint,” there is good reason to develop examples of captivating distance-learning blueprints.

Badging and achievements

The USC-ICT behavior scientists were looking to take an instructor-led information assurance (cybersecurity) training course, bring the training into the digital realm, and make sure it is captivating. The course title is Intelligence Architecture Online Course (IAOC).

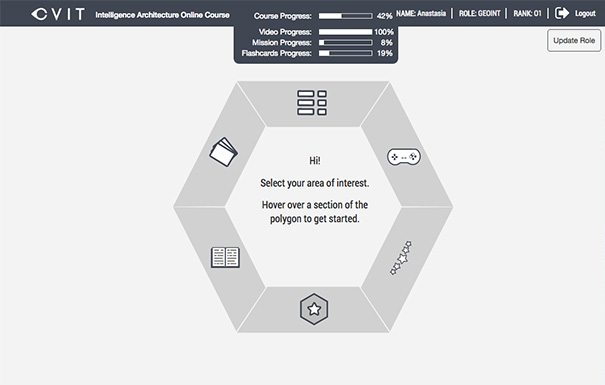

USC reimagined this certification course and included the requirement to deliver different rules or training scenarios based on the seniority and role of the learner. The entire training is estimated to take up to 10 hours for each role. There are several video instruction modules, a video game, assessments, flash cards, and an xAPI-populated learning experience with badging and progress indications (Figure 1 shows the learner portal). Within the courseware framework, IAOC is designed around a simulation game in which the learner is given training tasks to complete in a certain number of tries. An xAPI-populated learning experience means that the entire UX is populated with data from the learning record store (LRS). A tremendous amount of data is being shared with individuals about where they are in the training and how they are scoring in terms of competency.

Figure 1: Main learner portal of IAOC

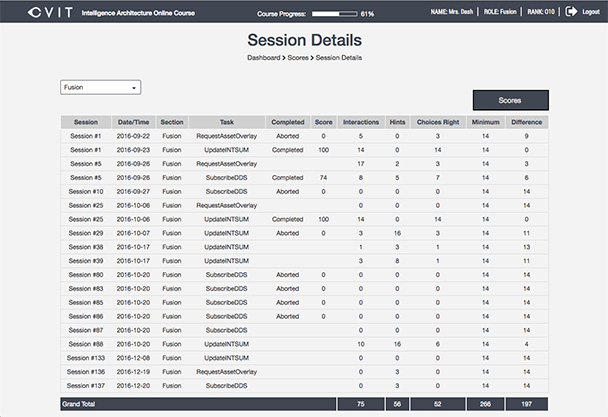

For the most part, this translates to a badging and achievements dashboard (shown later in Figure 4). The interaction data for each video game session is also made available to the user (Figure 2). What’s more, all the training data for all the users in aggregate is available to the instructional designers or scientists, and we are looking forward to seeing how they use it to verify, sustain, and improve this training. USC has access to the raw interaction data in their LRS. They had specific xAPI reporting requirements designed to enhance UX and display in a learner’s view. Additionally, IAOC supports the following two use cases: to be delivered stand-alone by individual course participants, or to be part of an instructor-led course accessed from the classroom. The instructor module supports blended learning (e.g., instructor-in-the-loop). This training also needed to be launched from an institutional LMS (see my prior article, “Remote HTML5 and xAPI Can Work with Your LMS”) and provide authentication access.

Figure 2: Game mission details to learner based on role

The foundational approach uses an HTML5 courseware (eLearning software) framework that includes both pre-baked xAPI recipes and a method for adding additional xAPI tracking. General concepts such as activity, page, and topic relate specifically to how the eLearning software is set up. A course is made up of one or more topics, and each topic comprises one or more pages. An activity is an interactive section such as a drag-and-drop exercise, scenario, video, or assessment, which is usually on a single page.
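The course, topic, page, and activity relationship described above can be pictured as a small data model. This is only an illustrative Python sketch, not the actual courseware framework: every class, field, and identifier here is hypothetical, and it assumes at most one activity per page, since the text says an activity is usually on a single page.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Activity:
    """An interactive section; `kind` is a hypothetical label."""
    activity_id: str
    kind: str  # e.g. "drag-and-drop", "scenario", "video", "assessment"


@dataclass
class Page:
    title: str
    activity: Optional[Activity] = None  # assumption: at most one per page


@dataclass
class Topic:
    title: str
    pages: List[Page] = field(default_factory=list)


@dataclass
class Course:
    title: str
    topics: List[Topic] = field(default_factory=list)

    def activities(self) -> List[Activity]:
        """Walk topics and pages in order and collect every activity."""
        return [
            page.activity
            for topic in self.topics
            for page in topic.pages
            if page.activity is not None
        ]


# Hypothetical course content, used only to exercise the model.
course = Course(
    title="IAOC",
    topics=[
        Topic("Core concepts", [
            Page("Introduction"),
            Page("Overview video", Activity("video-1", "video")),
        ]),
        Topic("Practice", [
            Page("Mission", Activity("scenario-1", "scenario")),
        ]),
    ],
)
```

Calling `course.activities()` returns the trackable activities in reading order, which is the list an xAPI tracking layer would most likely care about.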

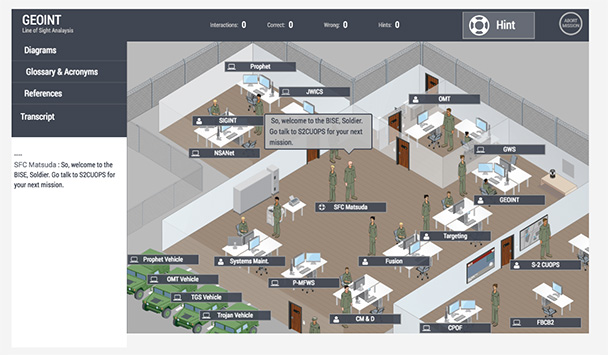

The game is created with Phaser.io (an open source framework for HTML5 Canvas and WebGL-powered, browser-based games). Figure 3 shows a game view. Everything is reporting xAPI to the LRS. In the case of the Phaser.io game, we developed what is called an xAPI client or middleware. It is not necessary for every vendor or technical engineer out there to know xAPI in order to get the technology to report xAPI. If the technology can publish messages, it is possible to subscribe to them and convert the messages and data into xAPI.

Figure 3: Phaser.io game view of a mission in progress

This is referred to as a publish-subscribe pattern, or pub/sub, and it is a great fundamental to remember when you want to get different software talking xAPI. If you have a pub/sub option available, it removes the need for the proprietary software engineers to understand xAPI; they simply need to publish events. Google Analytics is probably the best example to describe the method. Have you ever used Google Analytics? You just drop a little JavaScript on your HTML page and it works! That little JavaScript is a client. It’s the same concept.
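To make the pub/sub idea concrete, here is a minimal Python sketch of a middleware that subscribes to plain game events and converts them into xAPI-shaped statements. It is not the client built for IAOC: the `EventBus` class, the event fields, the topic name, and the `example.com` activity URL are all assumptions for illustration, and the `outbox` list stands in for the HTTP call that would send each statement to an LRS.

```python
from typing import Callable, Dict, List


class EventBus:
    """Tiny in-process publish-subscribe hub (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = {}

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The publisher knows nothing about xAPI; it only emits events.
        for handler in self._subscribers.get(topic, []):
            handler(event)


def to_xapi_statement(event: dict) -> dict:
    """Map a plain game event onto the actor/verb/object statement shape."""
    return {
        "actor": {
            "objectType": "Agent",
            "mbox": f"mailto:{event['learner_email']}",
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            # Assumed activity IRI scheme, not the real IAOC one.
            "id": f"https://example.com/iaoc/missions/{event['mission_id']}",
        },
        "result": {"success": event["success"]},
    }


outbox: List[dict] = []  # stand-in for sending statements to the LRS

bus = EventBus()
bus.subscribe("mission.finished", lambda e: outbox.append(to_xapi_statement(e)))

# The game side only publishes an event in its own vocabulary.
bus.publish("mission.finished", {
    "learner_email": "learner@example.com",
    "mission_id": "m1",
    "success": True,
})
```

The game code never builds a statement itself. Only the subscriber knows the xAPI vocabulary, so the mapping can change without touching the game.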

Table 1 provides an example of some of the initial xAPI reporting requirements given by USC.

Table 1: Some of the USC xAPI reporting requirements

| Metric | Formula | Notes |
| --- | --- | --- |
| Scenario Score | # Correct / (# Correct + # Wrong + c * # Hints) | For a single scenario attempt. c is a constant between 0 and 1, which might be manipulated at the user level (e.g., should be an on-create property for a user). |
| Scenario Mastery | Avg (Scenario Score of Last Three Attempts) | One of these for each scenario. |
| Role Mastery | Avg (Scenario Mastery for Scenarios in Role) | One of these for each role. |
| Role-Type Mastery | Avg (Role Mastery of Roles in the Type) | My Role, Other, or All. |
| Role Efficiency | # Correct / (# Correct + # Wrong + c * # Hints) | For all scenario attempts in a role. |
| Entity Efficiency | # Correct and Clicked on Entity / (# Correct and Clicked on Entity + # Wrong) | For all scenario types, when “entity” was a valif answer. Only consider when “Wrong” or they clicked on “entity” (e.g., don’t count if they clicked other right answers). |
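The first three formulas in Table 1 can be sketched in a few lines of Python. This is a hedged illustration rather than the project's code: the function names and the default `c = 0.5` are assumptions, and the table does not say what to do with an empty attempt or with fewer than three attempts, so the sketch returns 0.0 for an empty input and averages whatever attempts exist.

```python
from statistics import mean
from typing import Sequence


def scenario_score(correct: int, wrong: int, hints: int, c: float = 0.5) -> float:
    """# Correct / (# Correct + # Wrong + c * # Hints) for one attempt."""
    denominator = correct + wrong + c * hints
    # Assumption: an attempt with no recorded actions scores 0.0.
    return correct / denominator if denominator else 0.0


def scenario_mastery(attempt_scores: Sequence[float]) -> float:
    """Average of the last three attempt scores, oldest first."""
    last_three = list(attempt_scores)[-3:]
    # Assumption: with fewer than three attempts, average what exists.
    return mean(last_three) if last_three else 0.0


def role_mastery(scenario_masteries: Sequence[float]) -> float:
    """Average of the scenario mastery values for the scenarios in a role."""
    return mean(scenario_masteries) if scenario_masteries else 0.0


# Worked example: 8 correct, 2 wrong, 4 hints, c = 0.5
# gives 8 / (8 + 2 + 0.5 * 4) = 8 / 12.
example_score = scenario_score(correct=8, wrong=2, hints=4, c=0.5)
example_mastery = scenario_mastery([0.2, 0.5, 0.75, 1.0])  # uses 0.5, 0.75, 1.0
```

Because hints are weighted by `c`, a value near 0 makes hints almost free, while a value near 1 penalizes a hint as heavily as a wrong answer.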

The badging and achievements engine

As mentioned earlier, IAOC is designed around a simulation game in which the learner is given training tasks to complete in a certain number of tries. The total training focuses on three categories of activities, all using xAPI to track interactions:

- Knowledge: Core IA comprehension (text, interactive diagrams, videos, flash cards).

- Application: Applying knowledge to problem scenarios (game-based learning). This is a video game developed in Phaser.io.

- Evaluation: Causal performance reviews (performance screens based on learning metrics).

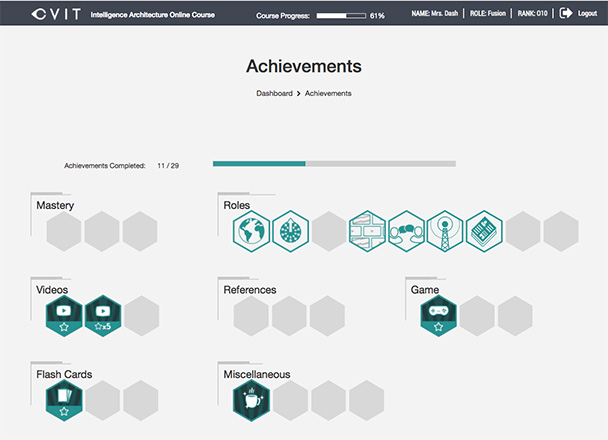

xAPI is used to record statements each step of the way, and the HTML5 courseware application displays collections of those statements as learner achievements and badges. USC needed a system of badges and achievements with criteria of actions or successful activities that, when met, “earn the badge.” Once all the badges are earned, the learner is ready to certify (Figure 4).

Figure 4: IAOC badging and achievements

Rather than hard-coding the rules for badging, creating an “achievement engine” helped to abstract away the logic into more of a service. We can keep these systems reusable and interoperable when we think this way. For instance, if today a learner must complete five videos to achieve a video badge, and you as the instructor want to add another step or rule to earn that badge, it should be simple to change the rules for achievements. Multiple instructional use cases point to a badging system or service, and some of these badges can represent core competencies or critical learning paths being achieved. What defines mastery may change. In the case of an achievement engine, we evaluate a stream of xAPI activities and results against rules that can change according to the learner process models (or business process flows) needed at a given time.
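One way to picture an achievement engine is to treat each badge rule as data and evaluate it against the learner's stream of statements. The Python sketch below shows that idea under stated assumptions and is not the engine built for IAOC: `BadgeRule`, `earned_badges`, and the `example.com` activity IDs are hypothetical, and a rule here only counts distinct activities matching a verb and an ID prefix.

```python
from dataclasses import dataclass
from typing import List

COMPLETED = "http://adlnet.gov/expapi/verbs/completed"


@dataclass(frozen=True)
class BadgeRule:
    """Declarative rule: N distinct matching activities earn the badge."""
    badge: str
    verb_id: str
    activity_prefix: str
    required_count: int


def earned_badges(statements: List[dict], rules: List[BadgeRule]) -> List[str]:
    """Return the badges whose rules are satisfied by the statements."""
    earned = []
    for rule in rules:
        # A set ensures a repeated statement for one activity counts once.
        matching = {
            s["object"]["id"]
            for s in statements
            if s["verb"]["id"] == rule.verb_id
            and s["object"]["id"].startswith(rule.activity_prefix)
        }
        if len(matching) >= rule.required_count:
            earned.append(rule.badge)
    return earned


# Hypothetical learner history: five completed videos.
statements = [
    {"verb": {"id": COMPLETED},
     "object": {"id": f"https://example.com/iaoc/videos/{n}"}}
    for n in range(1, 6)
]

five_videos = [BadgeRule("video", COMPLETED, "https://example.com/iaoc/videos/", 5)]
six_videos = [BadgeRule("video", COMPLETED, "https://example.com/iaoc/videos/", 6)]

badges_today = earned_badges(statements, five_videos)     # rule as it is today
badges_after_change = earned_badges(statements, six_videos)  # rule tightened
```

Raising the requirement from five videos to six changes only the rule data, not the engine. That is the point of moving the badging logic out of hard-coded checks.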

Today’s perceived value of eLearning

Interactive multimedia instruction (IMI) is still a relatively new art and science. Good eLearning requires skills in instructional design, cognitive sciences, media arts, and computer science. Even in the realm of platform instruction, many information-centric page turners provide low learning impact and low business impact. When that kind of information is merely shoveled onto a page, the result is poor eLearning.

I think there is a growing school of thought that is pushing to do away with courseware, or what we can call “traditional” IMI. To me, this is like throwing the baby out with the bathwater. There are affordable, open-source alternatives to Flash that work better in our world today. The rapid pace of technology has now led some to use derogatory terms for interactive page-turning courseware, such as “shovelware” or “data dumps,” as if something along the lines of an interactive textbook that may have all types of media integrated into the UX is a bad thing. The technologies and frameworks available today as tools do not make the smart decisions for us. We need to create and program the tools, and provide the rules for smarter distance learning. As an aside, that is why something like xAPI is so powerful in understanding the result of your instructional design work.

Historically, we have not been able to easily get actionable or historical interaction data from learning products, good or bad. So, I think it is unlikely that using the buzzwords “social learning” and “microlearning” is going to magically fix a problem, if we do not create and publish a decent IMI product in the first place. I am not speaking of the instructional designer who has the whole world upon her shoulders, no budget, no technical tools, and the mandate to just “make it better.” I am trying to talk to her leadership. Also, I do agree that social and microlearning strategies are extremely viable. I work and look for advancements in all of IMI, and I do think real progress is happening in all device-agnostic IMI. Hopefully today, the key to better training ROI is to simply pick the right tools for the job.

The path to adaptive learning and automated tutoring

Looking forward, we can develop logic and rules based on xAPI/LRS data to better serve IMI. We can even begin to adapt to the training event by querying the LRS on behalf of the learner, and/or mining and reacting to user interactions as the data is reported to the LRS. Remember, the LRS is simply learning-activity-stream data. It holds the statement that the learner “mastered” something, and the stream of activities and verbs it took to get there. If you want to intervene or adapt the training, you can create rules based on xAPI/LRS-captured results.
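As a rough illustration of a rule based on LRS-captured results, the Python sketch below picks a next step from a learner's recent scores on one scenario. Everything specific in it is an assumption rather than part of IAOC: the function name, the 0.8 threshold, the three-attempt window, the action labels, and the premise that the statements were already retrieved from the LRS and sorted oldest first. It reads the standard `result.score.scaled` field of each statement.

```python
from typing import List


def recommend_next_step(
    statements: List[dict],
    scenario_id: str,
    mastery_threshold: float = 0.8,  # assumed cut-off, not from the source
) -> str:
    """Choose a hypothetical next action from recent scenario scores."""
    scores = [
        s["result"]["score"]["scaled"]
        for s in statements
        if s["object"]["id"] == scenario_id
        and "score" in s.get("result", {})
    ]
    if not scores:
        return "attempt_scenario"  # nothing recorded yet for this scenario
    recent = scores[-3:]  # assumes statements are ordered oldest first
    average = sum(recent) / len(recent)
    return "advance" if average >= mastery_threshold else "review_material"


def scored(scenario_id: str, scaled: float) -> dict:
    """Build a minimal statement-like dict for the example."""
    return {
        "object": {"id": scenario_id},
        "result": {"score": {"scaled": scaled}},
    }


SCENARIO = "https://example.com/iaoc/scenarios/s1"  # hypothetical activity ID

struggling = [scored(SCENARIO, 0.4), scored(SCENARIO, 0.6), scored(SCENARIO, 0.5)]
improving = [scored(SCENARIO, 0.2), scored(SCENARIO, 0.9),
             scored(SCENARIO, 0.8), scored(SCENARIO, 1.0)]

step_for_struggling = recommend_next_step(struggling, SCENARIO)
step_for_improving = recommend_next_step(improving, SCENARIO)
```

The same function could run when a learner opens the portal, by querying the LRS, or as each new statement arrives. Only the source of `statements` would differ.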