It seems that we’ve reached a tipping point in the field of eLearning development tools. All of the recently introduced tools are cloud-based, and they address mobile learning head-on. Many also respond to the market’s demand for easier authoring of microlearning and gamification.

mLevel is one of the companies that have brought to market a viable option that addresses these needs and then some. For instance, it does a very good job of addressing analytics, which is a focus area near and dear to me.

The approach that mLevel takes is interesting and different and may very well be an excellent solution to a problem that many developers experience: needing to store content in a way that makes it easy to repurpose. The formatting of the content is separate from the content itself, allowing you to serve up the same content in different formats.

Objectives > missions

As in any learning endeavor, you start with a learning strategy that leads to a set of measurable objectives. In mLevel, these form the basis for what are called missions (Figure 1). A mission is, in essence, a topic that addresses an objective. You may, of course, have several missions for the learning strategy and for any objective.

Figure 1: Setting up a mission

At this point, an instructional designer will normally begin to create questions and content. Instead, here it would be wise to stop and think. You’re not creating questions for yourself; you’re creating them for learners. In fact, in mLevel you don’t have to worry about creating all the questions yourself. The cool thing about mLevel is that if you provide the content, it will generate the questions for the learner in an unbiased way. Hard to believe? Keep reading.

Creating the content grid

How do you provide the content? Again, start with the objectives and begin to build a grid of the knowledge that would allow learners, once they gain that knowledge, to meet those objectives.

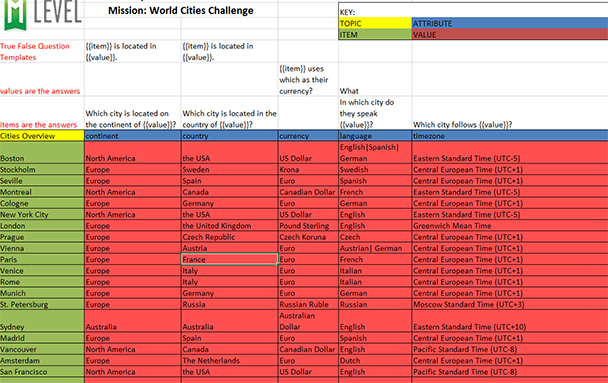

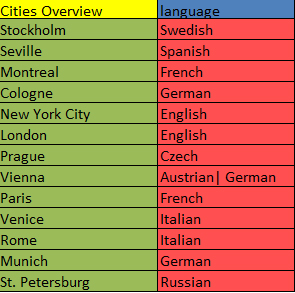

In mLevel this is called the content grid, and it looks something like the one in Figure 2. You can construct the content grid on the platform, or you can build it in Excel.

Figure 2: A typical content grid

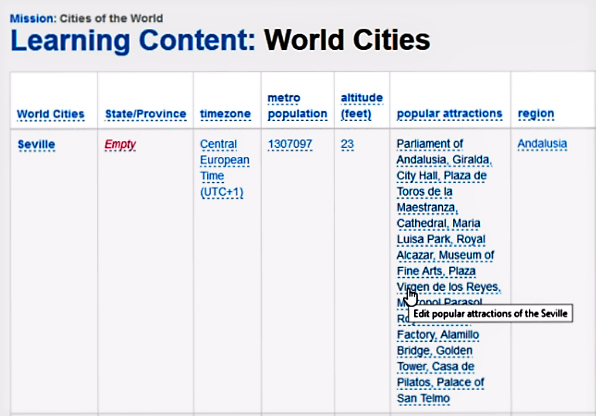

The top left cell is the actual topic name, Cities Overview. The left-hand column is where you place the items related to that topic, such as Boston and Sydney. Across the top, you indicate the attributes for each of those topic items—the qualities of each item that you want users to learn, such as on which continent and in which country the city is found. The intersection of an item and an attribute is a value. In fact, in the intersection, you may include multiple values, all of which would be considered correct. An example might be at the intersection of “Seville” and “Popular Attractions” where you would include all possible attractions. See Figure 3.

Figure 3: Multiple correct answers
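
To make the grid structure concrete, here is a minimal sketch of how such a grid could be represented in code. This is my own illustration, not mLevel's storage format: the dictionary layout, the `correct_values` helper, and the cell values are all assumptions chosen to mirror the description above.

```python
# Illustrative content grid. The layout and values are assumptions made
# for this sketch; they are not exported from mLevel.
content_grid = {
    "topic": "Cities Overview",
    "attributes": ["Continent", "Country", "Popular Attractions"],
    "items": {
        "Boston": {
            "Continent": ["North America"],
            "Country": ["United States"],
            "Popular Attractions": ["Freedom Trail", "Fenway Park"],
        },
        "Sydney": {
            "Continent": ["Australia"],
            "Country": ["Australia"],
            "Popular Attractions": ["Sydney Opera House", "Bondi Beach"],
        },
        "Seville": {
            "Continent": ["Europe"],
            "Country": ["Spain"],
            # Several values at one intersection: all count as correct.
            "Popular Attractions": ["Seville Cathedral", "Royal Alcazar", "Giralda"],
        },
    },
}


def correct_values(grid, item, attribute):
    """Return every value accepted as correct at one item/attribute intersection."""
    return grid["items"][item][attribute]


print(correct_values(content_grid, "Seville", "Popular Attractions"))
```

Storing each cell as a list keeps single-value and multiple-value intersections uniform, which is one simple way to model the "multiple correct answers" case.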

Creating the question templates

Once you’re done (and you can always edit the content grid later), it’s time to create the question templates for the information in the content grid.

Question templates can comprise:

- Single-answer multiple choice

- Multiple-answer multiple choice

- True or false

At this point, don’t think that you must build everything and generate questions only from the content grid. Other types of questions, such as image hot-spot and image-based questions, are available through other platform activities. More on that in a bit.

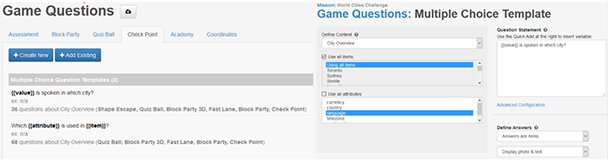

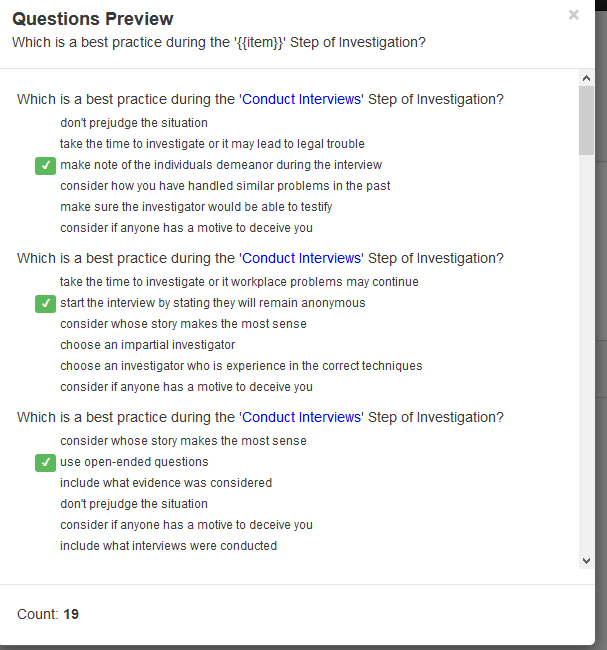

In Figure 4, you see a typical question template design screen, this one as part of a learning game. By building the content grid and a question template like this, you will avoid spending many hours creating questions from scratch. mLevel will generate dozens of questions in two seconds (literally).

Figure 4: Setting up a question template
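
To see why a grid plus a template saves so much time, consider this rough sketch of template expansion. The `{item}` placeholder syntax, the `expand_templates` function, and the sample data are hypothetical; the article does not show mLevel's actual template syntax.

```python
# Hypothetical template format; mLevel's real template syntax may differ.
grid = {
    "Prague": {"Country": "Czech Republic", "Language": "Czech"},
    "Rome": {"Country": "Italy", "Language": "Italian"},
    "Berlin": {"Country": "Germany", "Language": "German"},
}

templates = {
    "Country": "In which country is {item} located?",
    "Language": "Which language is spoken in {item}?",
}


def expand_templates(grid, templates):
    """Yield one (question, correct answer) pair per item/attribute intersection."""
    for item, values in grid.items():
        for attribute, template in templates.items():
            if attribute in values:
                yield template.format(item=item), values[attribute]


questions = list(expand_templates(grid, templates))
print(len(questions))  # 3 items x 2 templates = 6 questions
for question, answer in questions:
    print(question, "->", answer)
```

Each new row in the grid yields one more question per template, so the question count grows with the grid rather than with authoring effort.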

As you can see in both Figures 2 and 3, the intersection of an item and an attribute is where you put the correct answers. So how does mLevel choose the wrong-answer distractors when generating a multiple-choice question? It draws them from the values that other items in the grid have for the same attribute. For example, in Figure 5, when mLevel creates a question regarding the language spoken in Prague, it might list as answers:

- German

- Italian

- Czech

- Russian

Figure 5: Choosing wrong distractors

By the way, I find that the engine that generates the questions is pretty smart. It won’t choose two identical distractors from the attributes of different items, so you won’t end up with a confusing question. When creating this question, mLevel won’t list English twice (nor German, Italian, or French), even though each appears more than once in the list in Figure 5, because the program recognizes that the answers are the same.
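
The distractor behavior described above can be approximated in a few lines. This is a sketch of the general technique under my own assumptions, not mLevel's engine: the `build_choices` function, the sample column of languages, and the choice of three distractors are all illustrative.

```python
import random

# Illustrative "language" column of a grid; several values repeat on purpose.
languages = {
    "Prague": "Czech",
    "Berlin": "German",
    "Vienna": "German",
    "Rome": "Italian",
    "Milan": "Italian",
    "Moscow": "Russian",
    "London": "English",
    "Sydney": "English",
    "Paris": "French",
    "Lyon": "French",
}


def build_choices(column, item, n_distractors=3, rng=random):
    """Return shuffled answer choices: the correct value plus unique distractors."""
    correct = column[item]
    # A set collapses repeated values, so "German" or "English" can be offered
    # only once, and the correct answer is never reused as a distractor.
    pool = sorted({value for other, value in column.items()
                   if other != item and value != correct})
    distractors = rng.sample(pool, min(n_distractors, len(pool)))
    choices = distractors + [correct]
    rng.shuffle(choices)
    return choices


# A seeded generator keeps the example repeatable.
choices = build_choices(languages, "Prague", rng=random.Random(7))
print(choices)
```

Deduplicating the pool before sampling is what prevents the same answer from showing up twice in one question.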

The mLevel company tells me that many instructional designers have said generating questions this way helps them avoid introducing bias into the questions. Of course, you can further edit the questions.

Easy SME reviews

Next, you can generate a Microsoft Word document containing all of the possible questions that mLevel can generate for your learning activities, such as the one seen in Figure 6. Send the document to your subject matter experts, and they can then review all the questions easily, mark them up for you if need be, and allow you to further enhance the effectiveness of the questions.

Figure 6: Questions preview in Word

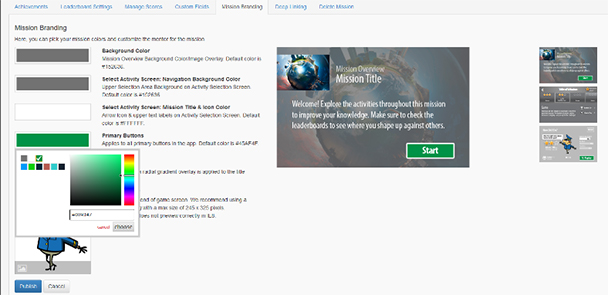

Make it your own

The platform lets you brand the activities so that they reflect your organization. That includes imagery and color palettes. You can customize the look of the activities to make it match any other lessons or style guides you have. See Figure 7.

Figure 7: Branding the activities

Select the activities you’re going to use

Aside from the content grid, mLevel offers 16 activities, which map to the different levels of Bloom’s Taxonomy. Designers can mix and match activities to fit their various learning objectives. For example, mLevel’s game-based activities (such as Block Party and Quiz Ball) help users remember the information before they continue to a more advanced activity (such as Pathfinder), where they apply that information in a scenario-based exercise. It’s easy to add these activities to missions by selecting them from a list and then assigning questions to them. Most of the activities are game-based, and the company used Bloom’s Taxonomy to ensure you could use them effectively. Figure 8 shows how the Fast Lane activity looks.

Figure 8: The Fast Lane activity

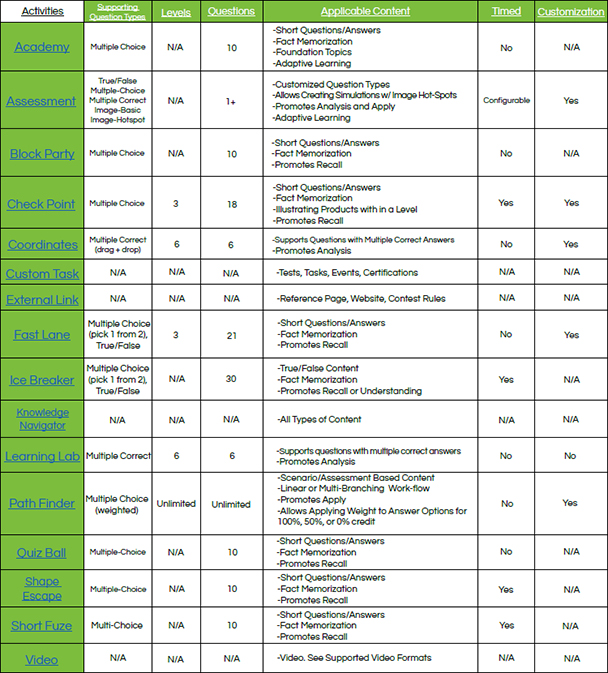

The activities include those in Figure 9, where you can also see information about each one.

Figure 9: All mLevel activities

Each mLevel activity asks a different number of questions based on the game’s needs. As you can see in Figure 9, certain activities are relatively short and ask six to eight questions, while others are longer and ask 20 to 30 questions. There is no limit to the number of questions you can assign to each activity. You should, however, assign at least 50 percent more questions than the activity will ask in one play, so that learners don’t see the same questions every time they play.
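
The 50 percent guideline is easy to turn into a quick calculation. The helper name `minimum_pool_size` is mine, and rounding up for odd question counts is my assumption about how to apply "at least".

```python
import math


def minimum_pool_size(questions_per_play, surplus=0.5):
    """Smallest question pool that is at least `surplus` larger than one play."""
    return math.ceil(questions_per_play * (1 + surplus))


print(minimum_pool_size(8))   # 12
print(minimum_pool_size(30))  # 45
```

So a short activity that asks eight questions wants a pool of at least 12, and a long one that asks 30 wants at least 45.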

Audio and video

Audio does not seem to be used much in mLevel activities, though video is used quite a bit. That’s not to say that audio isn’t supported, just that I haven’t seen much use of it. In fact, to find which audio formats are supported, you have to look on a page called Supported Video Formats.

There’s no way currently to upload a number of media files and have them reside on the mLevel servers in a resource library. Each time you want to introduce a video, for instance, you need to upload it from your computer or link to it online. This is not a show-stopper, but it would be nice to organize my media files in advance of using them. I’ve suggested that for a future version.

Analytics!

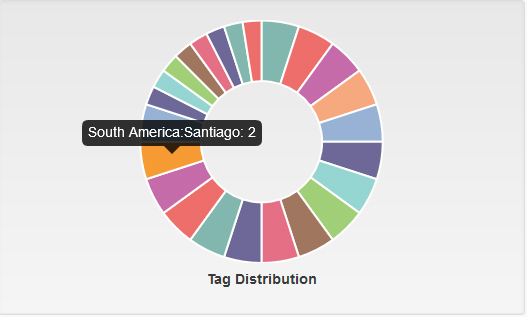

mLevel also uses the content grid to provide analytics on the back end. It does this by tagging each question with the knowledge intersections used to generate its correct answers, such as the fact that Chile is in South America and that Santiago is its capital. A pretty cool interactive color wheel shows you how the tags are distributed. See Figure 10.

Figure 10: The tag distribution color wheel

The platform then provides five different types of analytics dashboards.

- Completion: Certifies which users have taken the mission and which of them have completed it. See Figure 11.

Figure 11: Completion analytics

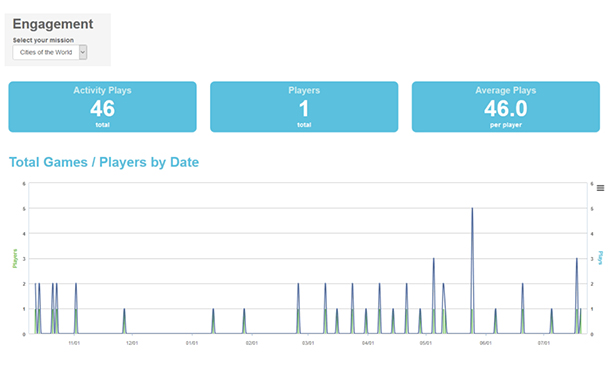

- Engagement: Measures how many users have taken a certain mission, when they took it, and the average number of times each user took the mission. See Figure 12.

Figure 12: Engagement analytics

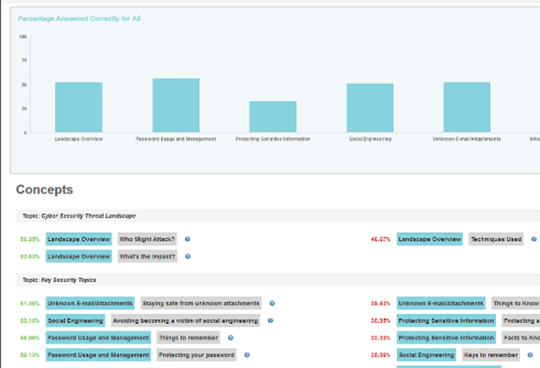

- Knowledge: Identifies the topics users are struggling with, along with the ones that they understand, by measuring the percentage of questions they get right about those specific topics.

- Performance: Measures how users are answering certain questions. This dashboard can be filtered to see which questions users get wrong the most often, allowing you to determine whether those questions are worded properly or whether there is an actual knowledge gap. See Figure 13.

Figure 13: Performance analytics

- Leaderboard: Shows the same leaderboard that users see in the platform, so administrators can see who is scoring the highest in game-based activities. See Figure 14.

Figure 14: Leaderboard

You can also export these reports as Excel spreadsheets, allowing you to combine data from multiple missions or break it down even further to identify knowledge gaps.
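
If you do take the data elsewhere, the kind of per-tag calculation behind the Knowledge dashboard is simple to reproduce. The sketch below is my own illustration: the response log, the tag naming scheme, and the `percent_correct_by_tag` function are assumptions, not mLevel's export format.

```python
from collections import defaultdict

# Hypothetical response log: (tag, answered_correctly). Each tag names the
# item/attribute intersection that produced the question.
responses = [
    ("Chile/Continent", True),
    ("Chile/Continent", True),
    ("Chile/Capital", False),
    ("Chile/Capital", True),
    ("Chile/Capital", False),
    ("Chile/Capital", False),
]


def percent_correct_by_tag(responses):
    """Return the percentage of correct answers for each tag."""
    totals = defaultdict(lambda: [0, 0])  # tag -> [correct, attempts]
    for tag, is_correct in responses:
        totals[tag][0] += int(is_correct)
        totals[tag][1] += 1
    return {tag: 100 * correct / attempts
            for tag, (correct, attempts) in totals.items()}


scores = percent_correct_by_tag(responses)
print(scores)  # {'Chile/Continent': 100.0, 'Chile/Capital': 25.0}
```

A low score on one tag, as with the capital here, points to a specific knowledge gap rather than a generally weak learner.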

Publish for desktop and mobile

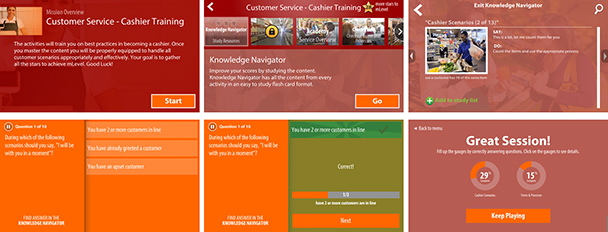

Once you publish your mLevel activities, learners will be able to access them equally well on mobile and desktop. I’ve taken a look at the demos on the mLevel app, which is free to download on your mobile device, and they look very good. See Figure 15, where I screen-captured six examples from my phone.

Figure 15: Mobile screen examples

Limitations of mLevel

- mLevel is currently not compliant with Section 508 of the Rehabilitation Act. However, I’m told that this capability is imminent. You may have to test mLevel thoroughly for accessibility compliance when working on US government contracts or on projects for companies that adhere to Section 508.

- mLevel started as an eLearning or mLearning tool geared heavily toward gamification. There’s nothing wrong with that, but in my view, games should not be the primary focus of learning. Most of the mLevel activities seem focused on games. I hope to see more activities added that are not based on games.

- You cannot download or upload missions. However, you can embed mLevel activities in any tool that allows for an embedded web window, or you can launch a separate web window from those authoring tools that allow you to do so. Most authoring tools today allow both.

- mLevel is not SCORM-compliant. However, you can link to an mLevel activity from an LMS, and you can also transfer data via the xAPI to a learning record store.
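
For readers new to xAPI, here is roughly what a single statement sent to a learning record store looks like. The actor/verb/object/result structure and the "answered" verb come from the xAPI specification, but the function name, the example.com identifiers, and the choice of fields are my assumptions; I have not seen the statements mLevel actually emits.

```python
import json


def build_answered_statement(learner_email, activity_id, question, response, success):
    """Build a minimal xAPI statement for one answered question."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,  # placeholder IRI, not a real mLevel identifier
            "definition": {"name": {"en-US": question}},
        },
        "result": {"success": success, "response": response},
    }


statement = build_answered_statement(
    "learner@example.com",
    "https://example.com/missions/cities-overview/prague-language",
    "Which language is spoken in Prague?",
    "Czech",
    True,
)
print(json.dumps(statement, indent=2))
```

A learning record store accepts statements like this as JSON, which is how activity data can reach your reporting even without SCORM packaging.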

Pricing

You pay for mLevel licenses according to the number of active users you have per month and other factors. There are some initial fees, after which you’ll pay $20 per month if you have only one user, down to $2 a month per user when you have 20,000 learners or more, which is obviously cost-effective for organizations large enough to have that many learners. There are discounts when paying in advance. It’s best to contact mLevel to see what your costs would be.