We all want training that is effective and efficient. And we all know that strategy is required. So why is learner activity data often limited to training completion and multiple-choice knowledge checks? One answer might be that the most common learner activity data protocols (like SCORM) are limited. But the nature of supply and demand suggests that there is a deeper reason: a lack of understanding that the relationship between design and data defines good strategy.

“Strategy” is an easy buzzword because it is so obviously desirable: everyone wants to claim that their training is grounded in strategy. But a truly strategic approach to instruction requires three key steps before development even begins:

- Identifying learning objectives (the goal)

- Designing the learner experiences that you believe will lead to the achievement of those objectives (your theory about how to reach the goal)

- Planning data collection to allow evaluation of your design (testing your theory)

Science of strategy #1: Identifying learning objectives

Learning objectives direct all other work. They should address the needs of your organization and of your learners, and they should be clear and succinct enough to measure.

A. Assess business goals and learner needs

Organizational goals and learner needs must both be met by the learning objectives, so assess each set of needs and then combine them.

Start by asking a diagnostic question: What is the most salient issue? Then ask why. In fact, ask why five times. Initial answers will almost always be symptoms rather than causes. The “Five Whys” technique is one useful root cause analysis method for uncovering the true problem. Other follow-up questions can help you narrow it down further.

Recently, while we were designing an online introductory course about autism, a root cause analysis led us to realize that our main focus should not be knowledge development; it should be a paradigm shift achieved through knowledge development. Specifically, the goal was for learners to recognize a lack of support, rather than child traits, as the more salient cause of disruptive behavior. In large part because we took the time to identify the true needs, this course has been incredibly successful.

B. Chunk and task analyze your learning objectives

Once you have accurately defined the issue, it’s time to translate it into action: What should this training enable learners to do that they couldn’t do before? Then, in a process parallel to root cause analysis, ask what that means. For learning experiences to map onto actual experiences, objectives must be chunked and task analyzed down to their smallest parts.

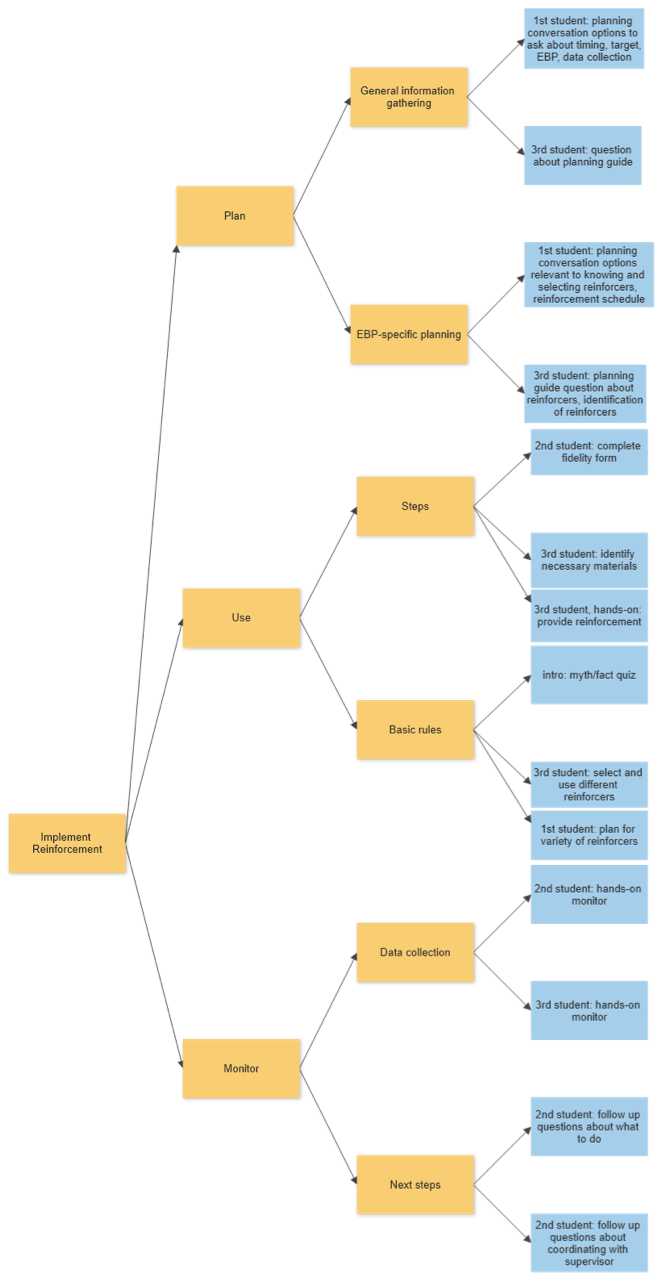

As an example, we wanted to develop the skill of implementing the evidence-based practice of reinforcement. Implementing broke down into the tasks of planning, using, and monitoring reinforcement. Each of those three steps was complex, and different parts of each task belonged to different roles. Because our target audience was paraprofessionals (paras) who are supervised by teachers, we had to delineate which tasks paras versus teachers needed to take on. We also had to decide on training boundaries: What skills should we provide to paras whose supervising teachers weren’t doing their jobs well? Breaking each larger task down into smaller actions enabled us to clearly delineate our learning objectives, which laid the foundation for designing relevant learning experiences.

C. Construct measurable objectives

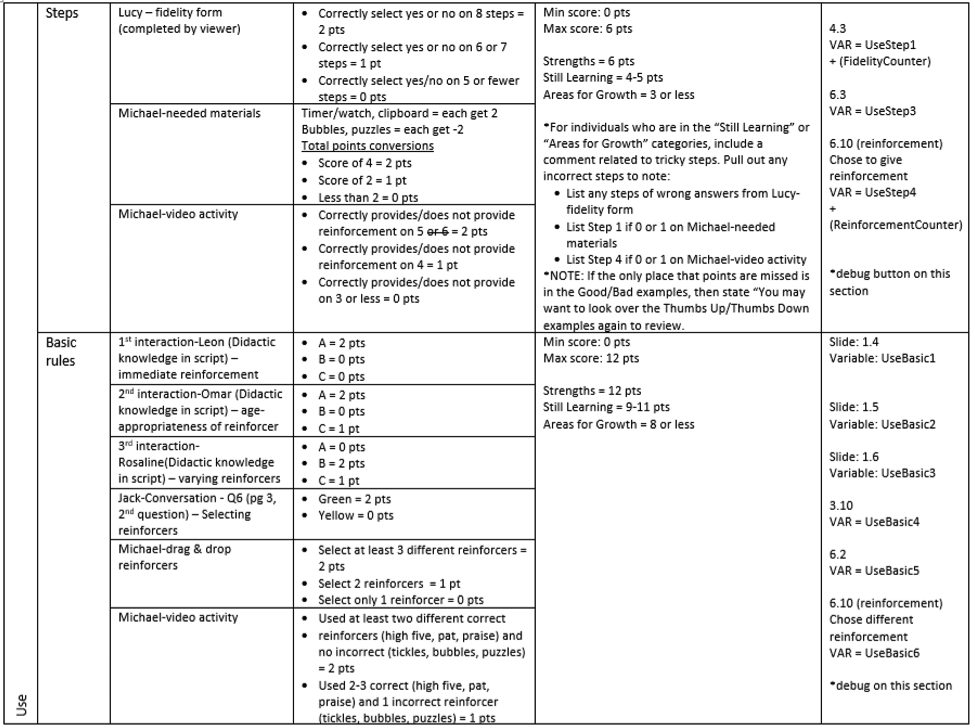

Breaking your first answer down into smaller and smaller pieces should result not only in well-organized, relevant learning experiences but also in individually measurable sub-tasks that will inform later evaluation. To make this work, phrase each objective in terms of observable behavior. “Know” and “understand” are not measurable objectives, because they describe internal states that cannot be observed directly. Broad objectives such as “use” should be defined more specifically by sub-objectives like “use at least two different, correct reinforcers” and “provide reinforcement at the appropriate time.”
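If you keep your task analysis in a spreadsheet or tool, it helps to think of it as a tree whose leaves are the smallest measurable sub-tasks. The sketch below is illustrative only: the `Objective` class, the `leaves` and `unmeasurable` helpers, and the verb list are hypothetical, and it assumes each objective is phrased starting with its action verb. It uses the reinforcement example above to list the leaf sub-tasks and flag any that start with an unmeasurable verb such as “know” or “understand.”

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Verbs treated as unmeasurable; this short list is an illustrative assumption.
UNMEASURABLE_VERBS = {"know", "understand"}


@dataclass
class Objective:
    """One node in a hypothetical task-analysis tree."""
    text: str
    children: list[Objective] = field(default_factory=list)

    @property
    def verb(self) -> str:
        # Assumes each objective begins with its action verb.
        return self.text.split()[0].lower()


def leaves(node: Objective) -> list[Objective]:
    """Return the smallest sub-tasks (nodes with no children), depth-first."""
    if not node.children:
        return [node]
    result: list[Objective] = []
    for child in node.children:
        result.extend(leaves(child))
    return result


def unmeasurable(node: Objective) -> list[str]:
    """List leaf objectives whose leading verb is not observable."""
    return [leaf.text for leaf in leaves(node) if leaf.verb in UNMEASURABLE_VERBS]


reinforcement = Objective("Implement reinforcement", [
    Objective("Plan reinforcement"),
    Objective("Use reinforcement", [
        Objective("Use at least two different, correct reinforcers"),
        Objective("Provide reinforcement at the appropriate time"),
    ]),
    Objective("Monitor reinforcement"),
])

for leaf in leaves(reinforcement):
    print(leaf.text)
print(unmeasurable(reinforcement))  # []
```

Each leaf is a candidate for its own measurement in the data collection plan; a non-empty result from `unmeasurable` signals an objective that still needs rewording.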

Science of strategy #2: Designing learner experiences

While the design of learner experiences is perhaps the most widely recognized instructional design function, the strategic utility of this work is not as well understood. When you propose learner experiences you believe will lead to the achievement of learning objectives, you are in essence proposing a theory of change—a hypothesis about the relationships between learner experiences and learning objectives. This theory is most likely to be supported if you a) clearly delineate the proposed relationships (diagrams are helpful); b) custom-tailor learner experiences to the relevant goals; and c) design learner experiences while you plan your data collection.

A. Clearly delineate the proposed relationships between learner experiences and learning objectives

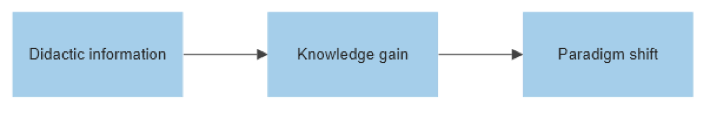

Taking the time to be explicit about the relationships you propose will help clarify the project and may help you gain buy-in for your ideas. In the introductory autism course described above, for example, simply conveying knowledge was unlikely to lead to the desired paradigm shift (Figure 1).

Figure 1: Conveying knowledge is unlikely to lead to paradigm shift

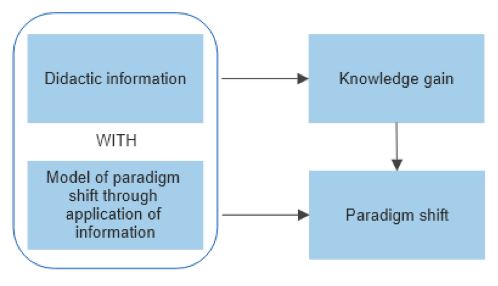

Instead, a learner experience is required to translate the knowledge gained into a change in perception of the situation (Figure 2).

Figure 2: A learner experience facilitates translation of knowledge to a change in perception

In this case, a scenario is modeled in which greater understanding leads to a reframing of the situation: Each subset of information provided about autism concludes with a learner interaction in which behavior may be seen initially as a child-specific problem (e.g., “he isn’t paying attention to me”), but is reframed as an indicator of the need for support relevant to the information learned (e.g., “he doesn’t understand the nature of back and forth interactions”).

B. Custom-tailor learner experiences to relevant goals

Move beyond multiple-choice knowledge checks and carefully design the experiences that are most likely to result in the desired behavior change. Most people learn best by doing, so instruction should include scenario-based narratives and realistic choices within virtual simulations. This applies equally to eLearning and instructor-led training, where activities such as role-plays can be used.

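Remember the 70:20:10 model (roughly 70 percent of learning comes from on-the-job experience, 20 percent from interactions with others, and 10 percent from formal training), and consider creating a blended learning strategy and/or coaching follow-up: eLearning should blend seamlessly into any training that follows.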

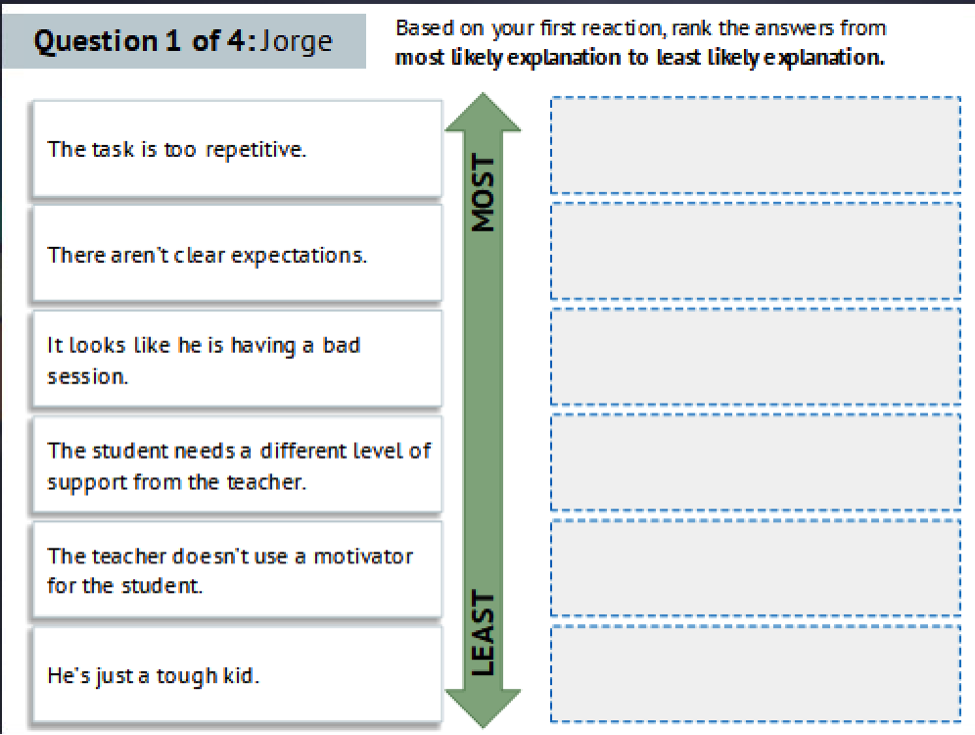

In the reinforcement module, for example, we designed a blended training program that began with highly interactive eLearning and ended with coaching. The online courses were thoroughly hands-on, with three virtual students (Jorge, Lucy, and Michael) whom learners supported. To support the first student, learners held a virtual conversation with the supervising teacher to create an appropriate reinforcement plan; appropriate use and monitoring of reinforcement were then modeled. To support the second student, learners referred to an existing plan to monitor reinforcement as it was used. To support the third student, learners had to ask for any necessary clarification of an existing plan, as well as use and monitor reinforcement themselves.

The specific learner experiences associated with all three virtual students were mapped to each learning objective (Figure 3).

Figure 3: Mapping learner experiences to learning objectives

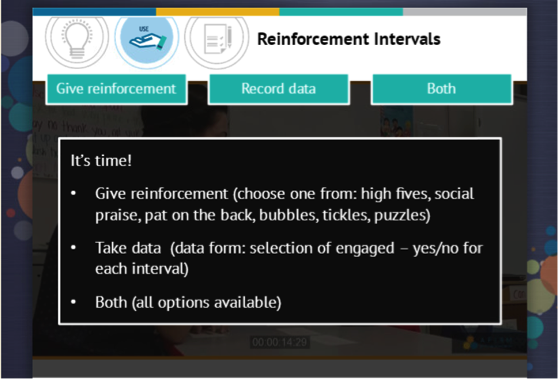
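A mapping like this can also be kept as a small data structure and checked for holes in the theory of change. The sketch below is a hypothetical illustration: the objective labels and the experience-to-objective links are paraphrased from the description of Jorge, Lucy, and Michael above and are not a reproduction of Figure 3. The helper names `coverage`, `gaps`, and `orphans` are likewise invented for this example.

```python
# Objectives and experience-to-objective links are paraphrased from the text
# for illustration only; they are NOT a copy of the team's actual mapping.
OBJECTIVES = ["plan", "use", "monitor"]

EXPERIENCES = {
    "Jorge: create a reinforcement plan with the teacher": {"plan"},
    "Lucy: monitor reinforcement using an existing plan": {"monitor"},
    "Michael: clarify the plan; use and monitor reinforcement": {"use", "monitor"},
}


def coverage(objectives, experiences):
    """Map each objective to the experiences intended to address it."""
    return {
        obj: [name for name, targets in experiences.items() if obj in targets]
        for obj in objectives
    }


def gaps(objectives, experiences):
    """Objectives that no experience addresses."""
    return [obj for obj, names in coverage(objectives, experiences).items() if not names]


def orphans(objectives, experiences):
    """Experiences that target something outside the objective list."""
    known = set(objectives)
    return [name for name, targets in experiences.items() if not targets <= known]


print(gaps(OBJECTIVES, EXPERIENCES))     # []
print(orphans(OBJECTIVES, EXPERIENCES))  # []
```

An empty `gaps` list means every objective has at least one experience proposed to reach it; an entry in `orphans` points to an activity that does not serve any stated objective.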

C. Design experiences while planning data collection

Stay on track by organizing your design at the same time that you plan data collection. Doing so will not only keep you focused on your eventual goal, or summative evaluation; it will also guide your formative evaluation. Plan experiences and interactions that will produce the data you need. At the same time, stay true to the learner experience by capturing learner choices and hands-on application unobtrusively, in ways that do not require learners to notice the tracking or provide extra input. Figure 4 shows our team’s data collection plan for the “use” objective.

Figure 4: Data collection plan for one objective (Editor's note: Lucy and Michael are two of the three virtual students described in Science of strategy #2, section B, above)

Science of strategy #3: Planning data collection

Like photographs of an event, data is what you will have left after training. Make sure it tells you what you need to know about what happened: It should be meaningful, valid, and complete.

A. Collect meaningful data

To understand the relationship between learner experiences and learning objectives, you need to know what the experience actually was. Rather than making do with binary training completion or even multiple-choice data, build data collection into the choices learners make within realistic scenarios. Context-situated data is easier to interpret and more likely to correlate with real behavior after training. An infrastructure that supports xAPI (the Experience API), which records learner activity as actor-verb-object statements stored in a Learning Record Store (LRS), is helpful in this regard.
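To make this concrete, here is a minimal sketch of what one in-scenario choice might look like as an xAPI statement. The statement shape (actor, verb, object, result, timestamp) follows the xAPI specification, and the verb IRI is from the ADL verb vocabulary; everything else is a hypothetical placeholder, including the `choice_statement` helper, the learner details, the `example.org` activity and extension IRIs, and the choice text. In practice the statement would be sent to an LRS statements endpoint with an `X-Experience-API-Version` header; that network step is omitted here.

```python
import json
from datetime import datetime, timezone


def choice_statement(learner_email, learner_name, activity_iri, activity_name,
                     choice, correct, criterion):
    """Build an xAPI statement describing one choice made inside a scenario."""
    return {
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_iri,  # hypothetical activity IRI
            "definition": {"name": {"en-US": activity_name}},
        },
        "result": {
            "success": correct,
            "response": choice,
            # Extension keys must be IRIs; this one is a made-up placeholder
            # recording which criterion (e.g., timing) the choice tests.
            "extensions": {"https://example.org/xapi/extensions/criterion": criterion},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


statement = choice_statement(
    "learner@example.org", "Sample Learner",
    "https://example.org/activities/michael/reinforcement-step-1",
    "Michael: provide reinforcement",
    choice="Reinforce immediately after the desired behavior",
    correct=True,
    criterion="timing",
)
print(json.dumps(statement, indent=2))
```

Because the response text and the criterion travel with the success flag, the record shows not just whether the learner was right but which realistic choice they made.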

For example, Figures 5 and 6 show screenshots of the hands-on activity we developed for the third student, Michael, in which learners use and monitor reinforcement.

Figure 5

Figure 6

This learner interaction provides a realistic scenario and allows hands-on application of concepts while capturing data about providing reinforcement at the right time (after a desirable behavior), and in the right way (the reinforcer is enjoyable to the student). It simultaneously captures data about monitoring reinforcement.
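One way to turn such an interaction into the two data points described above is to log the scenario as a sequence of events and score each reinforcement on timing and choice. The sketch below is hypothetical: the `Event` type, the event kinds, the `score_reinforcement` helper, and the sample behaviors and reinforcers are all invented for illustration. It also assumes that “right time” means the reinforcement immediately follows a desirable behavior.

```python
from dataclasses import dataclass


@dataclass
class Event:
    kind: str    # hypothetical event types: "behavior" or "reinforce"
    detail: str  # the behavior observed or the reinforcer given


def score_reinforcement(events, desirable_behaviors, preferred_reinforcers):
    """Score each reinforcement on timing and on choice of reinforcer.

    right_time: the immediately preceding event was a desirable behavior.
    right_way: the reinforcer is on the student's preference list.
    """
    scores = []
    for i, event in enumerate(events):
        if event.kind != "reinforce":
            continue
        previous = events[i - 1] if i > 0 else None
        right_time = (previous is not None
                      and previous.kind == "behavior"
                      and previous.detail in desirable_behaviors)
        right_way = event.detail in preferred_reinforcers
        scores.append({"reinforcer": event.detail,
                       "right_time": right_time,
                       "right_way": right_way})
    return scores


# Hypothetical scenario log.
log = [
    Event("behavior", "raises hand"),
    Event("reinforce", "sticker"),
    Event("behavior", "shouts out"),
    Event("reinforce", "praise"),
]
print(score_reinforcement(log, {"raises hand"}, {"sticker"}))
```

Scoring timing and choice separately keeps the two criteria distinct, so the data can show whether a learner struggles with when to reinforce, with what to use, or with both.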

B. Construct valid measurements

The operationalization or measurement of each concept within your design should be a valid reflection of the concept. For example, if your objective is to change on-the-job behavior, the best measure is observation. If you are looking to measure attitudes or opinions, self-report is best.

For the reinforcement lesson, we observed behavior in the classroom. To measure the paradigm shift for the introductory course, we designed pre- and post-tests that measured learners’ internal (student) versus external (task or teacher) causal attributions of undesirable child behavior.

Figure 7: Test design
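As a rough illustration of how pre/post attribution data could be summarized, the sketch below assumes each test item is coded as either an “internal” or an “external” attribution. It scores each learner by the share of external attributions and reports the mean per-learner change from pre-test to post-test. The coding scheme, the `external_share` and `attribution_shift` helpers, and the sample data are hypothetical; the actual test design (Figure 7) may score items differently.

```python
from statistics import mean


def external_share(responses):
    """Fraction of a learner's coded responses that are external attributions."""
    if not responses:
        raise ValueError("no responses to score")
    return sum(r == "external" for r in responses) / len(responses)


def attribution_shift(pre, post):
    """Mean per-learner change in external-attribution share (post minus pre).

    pre and post map learner IDs to lists of coded responses; only learners
    who completed both tests are compared.
    """
    paired = pre.keys() & post.keys()
    if not paired:
        raise ValueError("no learners with both pre- and post-tests")
    return mean(external_share(post[lid]) - external_share(pre[lid]) for lid in paired)


# Hypothetical coded responses for two learners.
pre = {
    "a": ["internal", "internal", "external", "internal"],
    "b": ["internal", "external", "external", "internal"],
}
post = {
    "a": ["external", "external", "external", "internal"],
    "b": ["external", "external", "external", "external"],
}
print(attribution_shift(pre, post))  # 0.5
```

A positive shift suggests learners moved toward attributing behavior to the task or teacher, which is the paradigm shift the course targeted; a real evaluation would also need an appropriate significance test.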

C. Test each relationship proposed

Plan to test your theory! By collecting the learner activity data needed to test each of your proposed relationships, you can identify which elements worked and which didn’t. This allows you to remove the less effective elements of your training and focus on the most effective ones, making iterative development more effective and efficient over time. It also deepens your understanding of your learners.

Good strategy is as good for you as it is for your learners. If you take the time to identify learning objectives, design the learning experiences you believe will achieve them, and plan the evaluation of your design, you put yourself in a great position to develop training with confidence, and clearly demonstrate the value of your work.

(Support for the development of the AFIRM for Para work came from Grant R324A170028, funded by the Institute of Education Sciences (IES). IES is part of the U.S. Department of Education, but the contents of this article do not necessarily reflect or represent the policy of that department.)