In the summer of 2009, my friend Saul Carliner, now associate professor and provost fellow for eLearning at Concordia University in Montreal, was part of a discussion in Training Magazine about whether ROI (return on investment) was worthwhile for measuring training’s value.

In that article, Carliner stated, “A 2003 meta-analysis (an analysis of several studies of training practice) by Arthur, Bennett, Edens, and Bell, concluded that 78 percent of all training is assessed for satisfaction. Their study also found that just 38 percent of programs are evaluated for learning, nine percent for transfer, and seven percent for impact. A 2005 study by another research team had similar results.” (See “References” at the end of this article.)

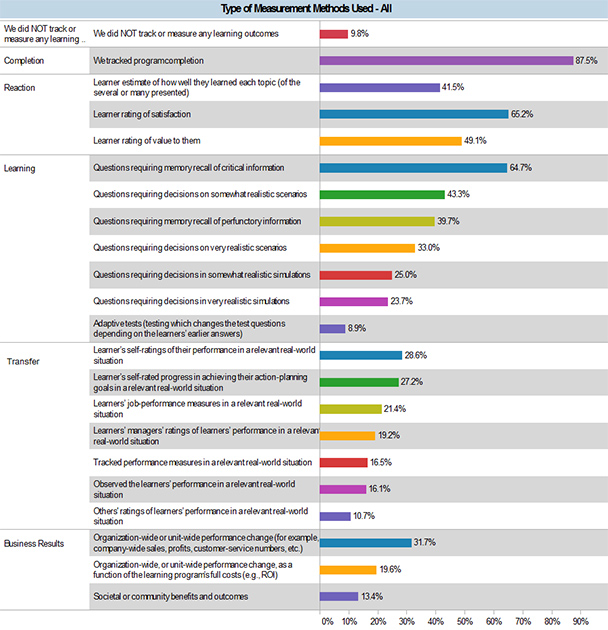

A September 2010 eLearning Guild Research Report, Getting Started in e-Learning: Measuring Success, found that almost 10% of respondents didn't measure anything at all, and almost 88% measured only completion (Figure 1).

Figure 1: Measurement methods used by respondents in Getting Started in e-Learning: Measuring Success

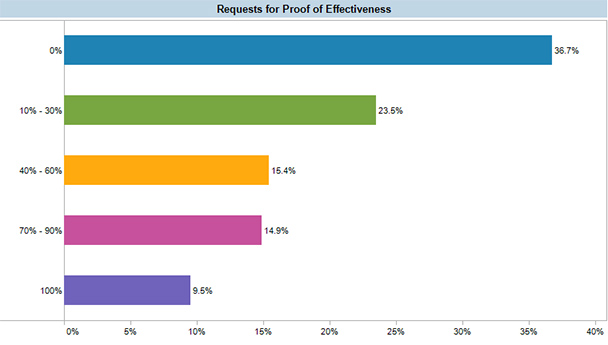

More thought-provoking still, the survey data also showed that few stakeholders asked for proof that the instruction was effective (Figure 2).

Figure 2: Percentage of respondents in Getting Started in e-Learning: Measuring Success that were asked for proof of effectiveness

Some people think that ROI analysis is the answer. But Carliner's article says that C-level executives emphasize perceptions over measures for a variety of reasons, one of which is that ROI accounting has serious flaws.

Because much training is intended to reduce errors, such as scrap, the expenses it prevents are never incurred. But accounting systems, the ones ultimately used to track ROI, can only handle expenses recorded on the books. Any resulting savings from reduced scrap are, at best, speculative and lack credibility with many financial experts. Furthermore, many evaluation experts acknowledge that, even when performance improves, other factors could have contributed to, or been primarily responsible for, the resulting savings, such as new machinery that produces fewer flawed products.

Carliner also acknowledges that, except for the largest training groups, few have the resources or skills for such evaluation activities. And, as the Guild's Getting Started in e-Learning: Measuring Success research report shows, stakeholders are not asking for it.

The Guild's newest research report, Beyond ROI: The Value of Learning, explains that the most fundamental problem with ROI is that it is based on measuring learning "events," whereas we now accept that most learning in our organizations happens informally and in unstructured ways. Therefore, models that measure the ROI of learning events can, at best, indicate the application and value of a small minority of learning, and only out of context.

Nic Laycock, the author of the report, agrees with Carliner that C-level executives are not nearly as interested in ROI as we think they are. When Laycock asked a director in a process industry how L&D outcomes should be measured, the director's response was illuminating: "Nothing further than I have a comfort that my people are able, and mentally and physically well-equipped to do their work. I judge it by the lack of frequency with which my direct reports complain about not having the necessary skills available to them. Of course I want to know we are spending our limited resources in an efficient way…"

Laycock explains that a 2011 Corporate Leadership Council survey found that while 84% of executives were happy with training (Towards Maturity reported a contrasting 65% just two years later), fewer than 15% would recommend the CLO and his or her team to colleagues as a resource for solving performance problems. The Council's more recent work indicates that as global growth gathers pace, performance improvements on the order of 10 to 20% will be required to ensure an organization's survival.

How does L&D begin to contribute to performance improvements on the order of 10 to 20%? Beyond ROI: The Value of Learning offers approaches every CLO needs for establishing the necessary partnerships and eliciting evidence that learning is being applied effectively, in context.

The report contains interviews with a small number of C-level executives who gave their views about the learning function’s importance and contributions. They were also asked what they believe is important to measure in learning in an enterprise context. Finally, the report contains the views of statisticians and metrics experts concerning current leading-edge models and methodologies.

References

Carliner, Saul. "Maybe ROI Really Is a Waste of Time." Training Magazine. June 2009. http://www.nxtbook.com/nxtbooks/nielsen/training0609/#/18

Shank, Patti. Getting Started in e-Learning: Measuring Success. The eLearning Guild. 3 September 2010. http://www.elearningguild.com/research/archives/index.cfm?id=145&action=viewonly&from=content&mode=filter&source=archives&showpage=3